The latest addition to Infineon Technologies’ AIROC portfolio is the AIROC CYW5551x family. It combines Wi-Fi 6/6E performance and advanced Bluetooth connectivity in a single device, making it suitable for a wide range of IoT applications.

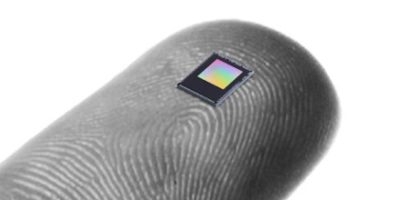

The AIROC CYW5551x Wi-Fi 6/6E and Bluetooth 5.4 family delivers secure, reliable 1×1 Wi-Fi 6/6E (802.11ax) connectivity that goes beyond the standard, according to Infineon. This is combined with advanced low-power Bluetooth connectivity. The optimised CYW55512 is a dual-band Wi-Fi 6 device, and the CYW55513 is a tri-band Wi-Fi 6/6E device. The company said both have power-efficient designs suited to smart homes, industrial applications, wearables and other small-form-factor IoT devices.

“Infineon’s new CYW5551x family brings the range, reliability, and network robustness of our 2×2 Wi-Fi 6/6E CYW5557x family to an IoT-optimised family,” said Sivaram Trikutam, vice president of Wi-Fi products at Infineon. “As part of the company’s digitalisation and decarbonisation strategy, this family is optimised for very low power consumption, making it ideal for battery-operated devices like wearables and IP cameras,” he said. The devices are also tuned for performance across a wide temperature range, so they can serve industrial and infrastructure applications such as electric vehicle charging, solar panel controls and logistics.

The AIROC CYW5551x family supports the 6 GHz band for Wi-Fi 6E, which offers lower latency and less interference. Its Bluetooth 5.4 Low Energy (LE) radio, with LE Audio, is optimised for range and power, with up to 20 dBm of transmit power.

Other features include improved multi-layer security (PSA Level 1-certifiable) and design versatility supported by a wide ecosystem of module and platform partners.

The devices support Linux, RTOS and Android, and come with a fully validated Bluetooth stack and sample code to shorten development time.

Infineon’s AIROC CYW55512 and CYW55513 are sampling now. The CYW55512 will be commercially available in March 2024, and the CYW55513 in June 2024.