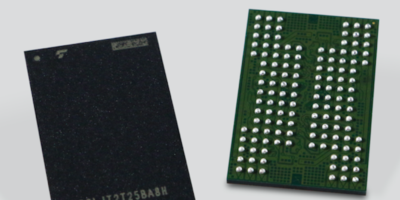

Kioxia Europe has developed its fifth-generation BiCS FLASH, a three-dimensional (3D) flash memory with a 112-layer vertically stacked structure. Samples of the 512Gbit (64Gbyte) device, which uses three-bit-per-cell (triple-level cell, TLC) technology, will begin shipping for specific applications in Q1 2020.

The memory addresses a variety of applications, including traditional mobile devices, consumer and enterprise SSDs, and emerging applications enabled by new 5G networks, artificial intelligence and autonomous vehicles.

The process technology will be applied to larger capacity devices, such as 1Tbit (128Gbyte) TLC and 1.33Tbit four-bit-per-cell (quadruple-level cell, QLC) devices, in due course, confirms Kioxia.

The company’s 112-layer stacking process technology combines with its circuit and manufacturing process technology to increase cell array density by approximately 20 per cent over the 96-layer stacking process. The technology reduces the cost per bit and increases the memory capacity that can be manufactured per silicon wafer, adds Kioxia. It also improves interface speed by 50 per cent and offers higher programming performance and shorter read latency.
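
As a rough sanity check on the figures quoted above, the arithmetic can be sketched in a few lines of Python. This is an illustrative calculation, not something Kioxia has published: the function names are hypothetical, 1Tbit is assumed to mean 1,024Gbit, and the QLC figure assumes the same number of cells as the 1Tbit TLC device storing four bits instead of three.

```python
# Illustrative arithmetic only; names and assumptions are not from Kioxia.

LAYERS_PREVIOUS = 96   # previous-generation layer count (from the article)
LAYERS_NEW = 112       # fifth-generation layer count (from the article)


def gbit_to_gbyte(gbit: float) -> float:
    """Convert gigabits to gigabytes (8 bits per byte)."""
    return gbit / 8


def layer_count_gain(new_layers: int, old_layers: int) -> float:
    """Fractional increase in layer count between two generations."""
    return new_layers / old_layers - 1


def tlc_to_qlc_tbit(tlc_tbit: float) -> float:
    """Capacity of the same cell array at 4 bits per cell instead of 3.

    Assumption: identical cell count in the TLC and QLC devices.
    """
    return tlc_tbit * 4 / 3


if __name__ == "__main__":
    print(f"512 Gbit = {gbit_to_gbyte(512):.0f} Gbyte")
    # Assumption: 1 Tbit = 1024 Gbit
    print(f"1 Tbit = {gbit_to_gbyte(1024):.0f} Gbyte")
    print(f"1 Tbit TLC as QLC = {tlc_to_qlc_tbit(1.0):.2f} Tbit")
    gain = layer_count_gain(LAYERS_NEW, LAYERS_PREVIOUS)
    print(f"Layer count increase, 96 to 112: {gain:.1%}")
```

The layer count alone rises by about 16.7 per cent, which is less than the quoted density gain of approximately 20 per cent. That is consistent with the statement that circuit and manufacturing process improvements contribute alongside the extra layers.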

Fifth-generation BiCS FLASH was developed jointly with technology and manufacturing partner Western Digital Corporation. It will be manufactured at Kioxia’s Yokkaichi plant and the newly built Kitakami plant.

Kioxia Europe (formerly Toshiba Memory Europe) is the European subsidiary of Kioxia, a supplier of flash memory and solid-state drives (SSDs). From the invention of flash memory to today’s BiCS FLASH 3D technology, Kioxia says it continues to pioneer memory solutions and services. The company’s 3D flash memory technology, BiCS FLASH, is shaping the future of storage in high-density applications, says Kioxia, including advanced smartphones, PCs, SSDs, automotive and data centres.