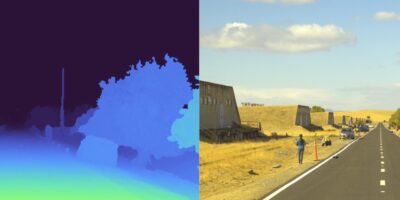

High-resolution visualisation in the Aurelion sensor simulation suite allows functions for autonomous driving to be tested and validated. Developed by dSpace, the suite can be operated either in the cloud or locally. It generates photo-realistic images in real time for camera simulation and uses ray tracing to create an exact environment for radar and lidar simulation. Developers can use Aurelion to validate algorithms for autonomous driving in simulated virtual test drives, long before a prototype hits the road, said dSpace.

The simulation can be used throughout all phases of the development process, including software-in-the-loop (SIL) testing, hardware-in-the-loop (HIL) testing, and simultaneous validation in the cloud. Aurelion also supports the development of AI-based functions and training data, including for neural network training and testing.

A powerful 3-D rendering engine, high-precision dSpace simulation models and realistic 3-D assets, such as pedestrians or vehicles, enable accurate simulation of sensors, environments, weather conditions, lighting conditions (day, night), and materials, said dSpace. Developers can simulate a wide range of scenarios and test corner cases that very rarely occur in real test drives.

Aurelion draws on an extensive library of sensor models that is continuously updated, said the company, allowing new sensors to be replicated in simulation long before they are launched on the market. dSpace has entered into co-operation agreements with leading sensor manufacturers and is continuously expanding its collaboration with developers of camera, lidar, and radar technologies. Third-party sensor models can also be integrated.

Aurelion bundles functionalities from dSpace MotionDesk and SensorSim into a single product, and adds extended functionalities and greater precision in sensor simulation. In combination with other dSpace tools, such as the ASM simulation models, the VEOS simulation platform, and ModelDesk for parameterisation, Aurelion provides an end-to-end solution for testing and validating the autonomous vehicle stack, said dSpace.

dSpace provides simulation and validation solutions worldwide for developing connected, autonomous, and electrically powered vehicles. The company’s products are used by automotive manufacturers and their suppliers to test the software and hardware components in new vehicles before a new model is allowed on the road. Beyond vehicle development, the company also works with engineers in aerospace and industrial automation.

The company is headquartered in Paderborn, Germany, and serves customers through regional dSpace companies in the USA, the UK, France, Japan, China, Croatia, and South Korea.