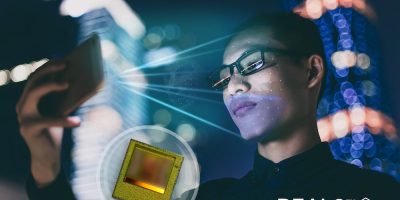

Infineon Technologies, working with Qualcomm Technologies, has developed a reference design for 3D authentication based on the Qualcomm Snapdragon 865 mobile platform.

The reference design uses the REAL3 3D time-of-flight (ToF) sensor and gives smartphone manufacturers a standardised way to integrate it.

At CES 2020, Infineon introduced the 4.4 x 5.1mm ToF sensor, describing it as the world’s smallest yet most powerful 3D image sensor with VGA resolution. It can be used for face authentication, enhanced photo features and authentic augmented reality experiences.

Andreas Urschitz, division president of power management and multimarket at Infineon, commented: “3D sensors enable new uses and additional applications such as secured authentication or payment by facial recognition. We continue to focus on this market and have clear growth targets”.

Infineon develops the 3D ToF sensor technology in co-operation with the software and 3D time-of-flight system specialist pmdtechnologies.

From this month, Infineon’s REAL3 ToF sensor will enable a video bokeh function in a 5G-capable smartphone for the first time, delivering optimal image effects even in moving images. The 3D image sensor captures 940nm infrared light reflected from the user and the scanned objects, and high-level data processing turns this into accurate depth measurements. A precise 3D point cloud algorithm and accompanying software then process the resulting 3D image data for the target application. The patented SBI (Suppression of Background Illumination) technology provides a wide dynamic measuring range, from bright sunlight to dimly lit rooms, so the sensor operates robustly without any loss of data processing quality.
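Time of flight means distance is inferred from how long the emitted infrared light takes to return from the scene. pmd-based sensors are generally described as measuring this indirectly: the light is modulated and the phase shift of the reflected signal is measured. The Python sketch below assumes that continuous-wave scheme. The function names and the 60 MHz modulation frequency are hypothetical illustrations, not REAL3 specifications or an Infineon or pmdtechnologies API.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def depth_from_phase(phase_rad: float, mod_freq_hz: float) -> float:
    """Distance in metres for a measured phase shift (continuous-wave ToF sketch).

    The light travels to the object and back (2 * d), and a full 2*pi of
    phase corresponds to one modulation wavelength c / f, so
    d = c * phase / (4 * pi * f).
    """
    if mod_freq_hz <= 0:
        raise ValueError("modulation frequency must be positive")
    phase = phase_rad % (2 * math.pi)  # the measured phase wraps every 2*pi
    return SPEED_OF_LIGHT * phase / (4 * math.pi * mod_freq_hz)


def unambiguous_range(mod_freq_hz: float) -> float:
    """Largest distance measurable before the phase wraps around: c / (2 * f)."""
    if mod_freq_hz <= 0:
        raise ValueError("modulation frequency must be positive")
    return SPEED_OF_LIGHT / (2 * mod_freq_hz)


if __name__ == "__main__":
    f_mod = 60e6  # hypothetical 60 MHz modulation frequency, not a REAL3 figure
    print(f"unambiguous range: {unambiguous_range(f_mod):.3f} m")
    print(f"depth for a pi/2 phase shift: {depth_from_phase(math.pi / 2, f_mod):.3f} m")
```

Because the phase wraps every 2π, a single modulation frequency caps the unambiguous range (about 2.5 m at the assumed 60 MHz). Continuous-wave ToF systems commonly combine several frequencies to extend it; the article does not say how REAL3 handles this.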

pmdtechnologies is a fabless IC company headquartered in Siegen, with further sites in Dresden and Ulm and subsidiaries in the USA, China and Korea. It claims to be the leading supplier of CMOS-based 3D ToF digital imaging technology. Founded in 2002, the company holds more than 350 patents worldwide covering pmd-based applications, the pmd measurement principle and its realisation. The company also serves industrial applications.