In the automotive and transportation marketplace, large battery stacks provide high output power without producing the harmful emissions (that is, carbon monoxide and hydrocarbons) associated with gasoline-powered combustion engines. Ideally, each individual battery in the stack contributes equally to the system. However, not all batteries are created equal. Even batteries of the same chemistry with the same physical size and shape can have different total capacities, different internal resistances, different self-discharge rates, etc. In addition, they can age differently, adding another variable to the battery life equation.

A battery stack's performance is limited by the lowest capacity cell in the stack; once the weakest cell is depleted, the entire stack is effectively depleted. The health of each individual battery cell in the stack is assessed from its state of charge (SoC), the ratio of the cell's remaining charge to its capacity. SoC is estimated from battery measurements such as voltage, integrated charge and discharge currents, and temperature. Precision single-chip and multichip battery management systems (BMS) combine battery monitoring (including SoC measurements) with passive or active cell balancing to improve battery stack performance. Together, monitoring and balancing result in:

- Healthy battery state of charge independent of the cell capacity
- Minimised cell-to-cell state of charge mismatch
- Minimised effects of cell ageing (ageing results in lost capacity)
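As a minimal sketch of the SoC bookkeeping described above, the Python below uses pure coulomb counting (integrating current over time) and takes the stack's remaining charge to be that of its weakest cell. It is an illustration under simplifying assumptions: the `Cell` class and the function names are hypothetical, positive current is assumed to mean discharge, and the voltage and temperature corrections that a real BMS applies to its SoC estimate are omitted.

```python
from dataclasses import dataclass


@dataclass
class Cell:
    capacity_ah: float  # actual (possibly aged) capacity of the cell, in Ah
    soc: float          # state of charge, 0.0 (empty) to 1.0 (full)

    def remaining_ah(self) -> float:
        """Remaining charge = SoC x capacity."""
        return self.soc * self.capacity_ah


def coulomb_count(cell: Cell, current_a: float, dt_s: float) -> None:
    """Update SoC by integrating current over one time step.

    Assumed sign convention: positive current discharges the cell.
    """
    delta_ah = current_a * dt_s / 3600.0
    cell.soc = min(1.0, max(0.0, cell.soc - delta_ah / cell.capacity_ah))


def stack_remaining_ah(cells: list[Cell]) -> float:
    """In a series stack the same current flows through every cell,
    so the stack is empty as soon as its weakest cell is."""
    return min(c.remaining_ah() for c in cells)


cells = [Cell(10.0, 1.0), Cell(9.0, 1.0), Cell(10.5, 1.0)]
print(stack_remaining_ah(cells))  # 9.0
for c in cells:
    coulomb_count(c, current_a=5.0, dt_s=3600.0)  # 5 A for one hour
print(stack_remaining_ah(cells))  # approximately 4.0
```

Here three fully charged cells of 10 Ah, 9 Ah and 10.5 Ah form a stack that can deliver only 9 Ah; after one hour at 5 A the 9 Ah cell has about 4 Ah left, and so does the stack, even though the other cells still hold 5 Ah and 5.5 Ah.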

Passive and active cell balancing offer different advantages to the battery stack, and Analog Devices offers solutions for both methods in our battery management product portfolio. Let’s first examine passive balancing.

Passive Balancing Allows All Cells to Appear to Have the Same Capacity

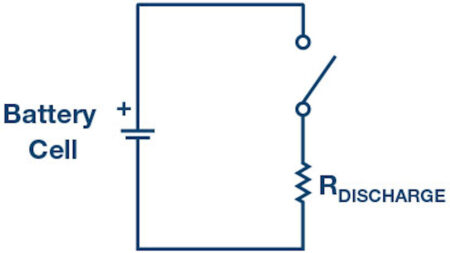

Initially, a battery stack may have fairly well-matched cells. Over time, however, cell matching degrades due to charge/discharge cycles, elevated temperature, and general ageing. A weak battery cell charges and discharges faster than stronger, higher capacity cells, so it becomes the limiting factor in the run-time of a system. Passive balancing makes the stack look as if every cell has the same capacity as the weakest cell. Using a relatively low current, it drains a small amount of energy from high SoC cells during the charging cycle so that all cells charge to their maximum SoC. This is accomplished with a switch and a bleed resistor in parallel with each battery cell.

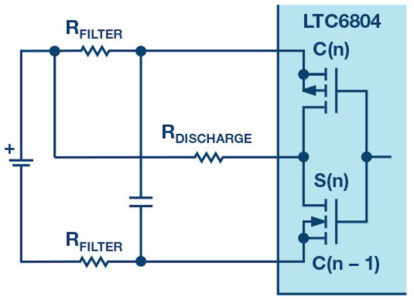

Figure 1. Passive cell balancer with bleed resistor.
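The balancing decision itself can be sketched in a few lines. The Python below is a hypothetical illustration, not the algorithm used by any particular device: it closes the bleed switch of any cell whose SoC exceeds the lowest cell's SoC by more than an assumed threshold, and then computes the net charging current into each series cell once the bleed current is subtracted from the charger current.

```python
def cells_to_bleed(soc: list[float], threshold: float = 0.01) -> list[int]:
    """Indices of cells whose SoC exceeds the lowest cell's SoC by more
    than `threshold` (an assumed value); their bleed switches are closed."""
    floor = min(soc)
    return [i for i, s in enumerate(soc) if s - floor > threshold]


def net_charge_currents(soc: list[float], i_charge_a: float,
                        i_bleed_a: float, threshold: float = 0.01) -> list[float]:
    """Net current into each series cell during charging: the charger
    current flows through every cell, and a cell being bled also loses
    i_bleed_a through its resistor."""
    bleeding = set(cells_to_bleed(soc, threshold))
    return [i_charge_a - (i_bleed_a if i in bleeding else 0.0)
            for i in range(len(soc))]


soc = [0.80, 0.86, 0.805, 0.90]
print(cells_to_bleed(soc))                 # [1, 3]
print(net_charge_currents(soc, 2.0, 0.1))  # [2.0, 1.9, 2.0, 1.9]
```

Because the bled cells gain charge more slowly than the others, the SoC spread narrows as charging continues. The threshold adds hysteresis so that switches do not toggle on measurement noise; its value here is purely illustrative.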

Charge is bled off the high SoC cell (the power is dissipated in the resistor) so that charging can continue until all cells are fully charged.
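The bleed current and the power dissipated in the resistor follow directly from Ohm's law. The sketch below assumes a fixed cell voltage while bleeding and optionally includes a switch on-resistance; the 4.0 V and 40 Ω values in the example are illustrative, not recommended component values.

```python
def bleed_current_a(v_cell: float, r_bleed_ohm: float,
                    r_switch_ohm: float = 0.0) -> float:
    """Current drawn from the cell when its balancing switch is closed."""
    return v_cell / (r_bleed_ohm + r_switch_ohm)


def bleed_power_w(v_cell: float, r_bleed_ohm: float,
                  r_switch_ohm: float = 0.0) -> float:
    """Power dissipated as heat in the bleed resistor (I^2 * R)."""
    i = bleed_current_a(v_cell, r_bleed_ohm, r_switch_ohm)
    return i * i * r_bleed_ohm


print(bleed_current_a(4.0, 40.0))  # 0.1
print(bleed_power_w(4.0, 40.0))    # approximately 0.4
```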

Passive balancing gives all cells the same SoC, but it does not improve the run-time of a battery-powered system. It is a fairly low cost method of balancing the cells, but it wastes energy in the process because the excess charge is dissipated in the discharge resistor. Passive balancing can also correct for long-term cell-to-cell mismatch in self-discharge current.
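The cost of passive balancing can be estimated from the SoC mismatch to be removed. The sketch below uses illustrative numbers and treats the cell voltage as constant; it computes the charge to bleed off, the time that takes at a given bleed current, and the energy turned into heat.

```python
def passive_balance_cost(delta_soc: float, capacity_ah: float,
                         v_cell: float, i_bleed_a: float):
    """Charge, time and heat needed to bleed `delta_soc` from one cell.

    Simplification: the cell voltage is treated as constant while bleeding.
    """
    charge_ah = delta_soc * capacity_ah  # excess charge to remove
    hours = charge_ah / i_bleed_a        # time at a constant bleed current
    energy_wh = charge_ah * v_cell       # energy dissipated as heat
    return charge_ah, hours, energy_wh


charge, hours, heat = passive_balance_cost(0.05, 40.0, 3.7, 0.1)
print(f"{charge:.1f} Ah, {hours:.1f} h, {heat:.1f} Wh")  # 2.0 Ah, 20.0 h, 7.4 Wh
```

Bleeding a 5% SoC excess from a 40 Ah cell at 3.7 V with 100 mA removes 2 Ah over about 20 hours and dissipates about 7.4 Wh, which illustrates why a low bleed current keeps passive balancing simple but makes it slow and wasteful when the mismatch is large.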

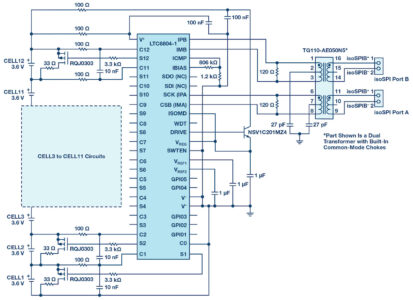

Figure 2. LTC6804 application circuit with external passive balancing.

Multicell Battery Monitors with Passive Balancing

Analog Devices has a family of multicell battery monitors that include passive cell balancing. These devices feature a stackable architecture that allows hundreds of cells to be monitored. Each device measures up to 12 series-connected battery cells with a total measurement error of less than 1.2 mV. The 0 V to 5 V per-cell measurement range makes them suitable for most battery chemistries. The LTC6804 is shown in Figure 2.
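Because each device handles up to 12 cells, sizing a stacked system is simple arithmetic. The sketch below counts the devices needed for a given number of series cells, maps a cell's position in the stack to a device and channel, and checks a reading against the 0 V to 5 V range. The in-order, 12-cells-per-device assignment is an assumption for illustration; real designs may populate fewer channels per device.

```python
import math

CELLS_PER_DEVICE = 12    # each monitor measures up to 12 series cells
V_MIN, V_MAX = 0.0, 5.0  # per-cell measurement range, volts


def devices_needed(n_cells: int) -> int:
    """Number of stacked monitor ICs needed for n_cells in series."""
    return math.ceil(n_cells / CELLS_PER_DEVICE)


def locate_cell(cell_index: int) -> tuple[int, int]:
    """Map a 0-based cell position to (device, channel), assuming cells are
    assigned to devices in order, 12 per device (hypothetical layout)."""
    return divmod(cell_index, CELLS_PER_DEVICE)


def in_range(v_cell: float) -> bool:
    """True if a cell voltage lies inside the 0 V to 5 V measurement range."""
    return V_MIN <= v_cell <= V_MAX


print(devices_needed(96))   # 8
print(devices_needed(100))  # 9
print(locate_cell(13))      # (1, 1)
```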

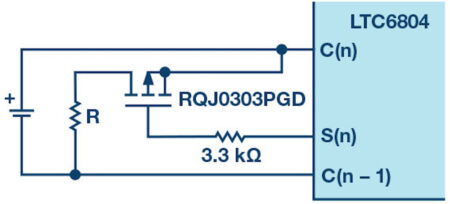

The LTC6804 features internal passive balancing (Figure 3) and can also be configured with external MOSFETs if desired (Figure 4). It also has an optional programmable passive balancing discharge timer that gives the user more flexibility in system configuration.

Figure 3. Passive balancing with internal discharge switch.

Figure 4. Passive balancing with external discharge switch.
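A balancing discharge timer can act as a backstop so that a bleed switch does not stay on indefinitely. The sketch below estimates how long a cell must bleed and picks a timer setting from a caller-supplied list of options. The option list, the function names, and the round-up policy are all assumptions; the LTC6804's actual timer settings and register encoding should be taken from its data sheet.

```python
import bisect
from typing import Optional


def required_bleed_minutes(charge_ah: float, i_bleed_a: float) -> float:
    """Time needed to remove charge_ah at a constant bleed current."""
    return charge_ah / i_bleed_a * 60.0


def pick_timeout(required_min: float,
                 options_min: list[float]) -> Optional[float]:
    """Smallest available timer setting that is >= required_min, or None
    if no setting is long enough. options_min is a hypothetical list of
    settings supplied by the caller, not the device's actual table."""
    opts = sorted(options_min)
    i = bisect.bisect_left(opts, required_min)
    return opts[i] if i < len(opts) else None


need = required_bleed_minutes(0.02, 0.1)    # about 12 minutes
print(pick_timeout(need, [5, 10, 30, 60]))  # 30
```

Rounding up assumes host software turns the switch off once the target SoC is reached, with the timer only limiting how long the switch can stay on if that does not happen; rounding down would instead favour avoiding over-bleeding at the cost of more host intervention.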

For customers who wish to maximise system run-time and charge more efficiently, active balancing is the best option. With active cell balancing, excess energy is not dissipated in a resistor but redistributed to other cells in the stack during both charging and discharging. When discharging, the weaker cells are replenished by the stronger cells, extending the time it takes for a cell to reach its fully depleted state. For more on active balancing, see the technical article “Active Battery Cell Balancing.”
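The run-time benefit can be bounded with an idealized comparison. The sketch below assumes lossless charge transfer and equal cell voltages: with passive balancing a series stack can deliver only the remaining charge of its weakest cell, while an ideal active balancer could in principle deliver the average remaining charge across all cells. Real active balancers have conversion losses, so practical results typically fall somewhere between the two figures.

```python
def stack_charge_passive_ah(remaining_ah: list[float]) -> float:
    """Series stack with passive balancing: limited by the weakest cell."""
    return min(remaining_ah)


def stack_charge_active_ideal_ah(remaining_ah: list[float]) -> float:
    """Ideal, lossless active balancing: each Ah delivered by the series
    stack consumes N Ah of stored charge in total across the N cells, so
    with perfect redistribution the stack can deliver total charge / N."""
    return sum(remaining_ah) / len(remaining_ah)


cells_ah = [10.0, 9.0, 10.5, 10.5]
print(stack_charge_passive_ah(cells_ah))       # 9.0
print(stack_charge_active_ideal_ah(cells_ah))  # 10.0
```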

About the Authors

Sam Nork has worked for Analog Devices’ Power Products Business Unit (previously Linear Technology) since 1988. As a general manager and design director, Sam leads a development team of over 120 engineers focused on battery charger, ASSP, PMIC, and consumer power products. He has personally designed and released numerous portable power management integrated circuits, and is inventor/co-inventor on 11 issued patents. Prior to joining Linear Technology, Sam worked for Analog Devices in Wilmington, MA as a product/test development engineer. He received A.B. and B.E. degrees from Dartmouth College. He can be reached at sam.nork@analog.com.

Kevin Scott works as a product marketing manager for the Power Products Group at Analog Devices, where he manages boost, buck-boost, and isolated converters, as well as drivers and linear regulators. He previously worked as a senior strategic marketing engineer, creating technical training content, training sales engineers, and writing numerous website articles about the technical advantages of the company’s broad product offering. He has been in the semiconductor industry for 26 years in applications, business management, and marketing roles.

Kevin graduated from Stanford University in 1987 with a B.S. in electrical engineering and started his engineering career after a brief stint in the NFL. He can be reached at kevin.scott@analog.com.