THine Solutions has announced THSCJ101, a new THEIA-CAM 13MP PDAF camera for the NVIDIA Jetson Orin NX and Jetson Orin Nano platforms. Because the kit comes with pre-optimised ISP firmware and a Linux driver, Jetson users can easily integrate advanced imaging capabilities into their systems.

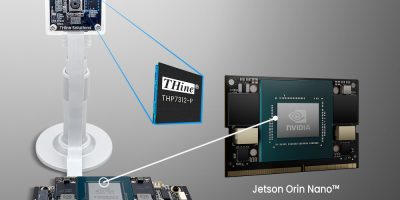

The THSCJ101 kit is a camera reference design kit for embedded camera applications built on the NVIDIA Jetson Orin NX or Jetson Orin Nano platform. It is based on THine’s THP7312-P ISP and Sony’s IMX258 13MP CMOS PDAF image sensor.

THine’s optimised ISP firmware provides very fast autofocus using Phase Detection Autofocus (PDAF) technology, along with best-in-class image quality. The kit includes all the hardware needed to connect to Jetson Orin carrier boards that have a 22-pin MIPI CSI-2 input connector: a camera board in an acrylic case and a flat flexible cable.

A Video4Linux2 (V4L2) driver for the THSCJ101 is also available for controlling various video functions. Performance is repeatable from kit to kit, which suits high-volume production. During production, THine characterises the image parameters of each image sensor and calibrates the image signal processing to compensate for sensor-to-sensor variation.

All technical information, including the reference circuit schematics, ISP firmware and V4L2 driver, is available to customers.

For customers that require unique image performance features, THine can also provide a GUI-based software development tool for customising the ISP firmware and/or the choice of image sensor. As a result, the THSCJ101 can shorten time-to-market for NVIDIA Jetson Orin NX/Nano platform users without expensive integration costs or the additional effort of developing an embedded camera system.

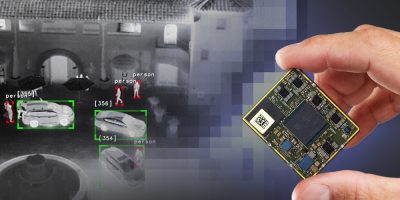

THEIA-CAM is designed for embedded vision systems. Because it is built around THine’s own optimised ISP, THEIA-CAM offers best-in-class image quality and high production quality. This makes it suitable for every project phase, from proof of concept to high-volume production. THEIA-CAM supports a range of operating systems, including Windows, macOS, Android, and Linux. It also supports a range of platforms, including Raspberry Pi, Jetson Orin, the i.MX 8M families, and the MediaTek Genio series. THEIA-CAM addresses a wide range of camera applications, including but not limited to AI + IoT devices, AR glasses, barcode reading devices, biometric devices, bodycams, document scanners, machine vision systems, medical scopes, microscopes, surveillance cameras, vision assistance glasses, and webcams. THSCU101, the first kit in the family, is a 13MP PDAF USB Video Class (UVC) camera.