Super Micro Computer says its Universal GPU server system brings customisation to AI, machine learning and high performance computing (HPC) applications. According to the company, the “revolutionary technology” simplifies large-scale GPU deployments and is a future-proof design that supports yet-to-be-announced technologies.

The Universal GPU server combines technologies that support multiple GPU form factors, CPU choices, and storage and networking options. These are optimised to deliver configured, highly scalable systems for specific AI, machine learning and HPC workloads, with the thermal headroom for the next generation of CPUs and GPUs, said the company.

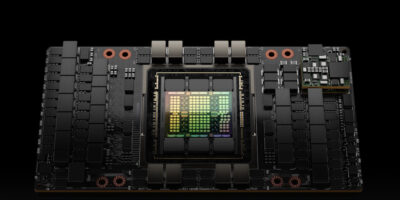

Initially, the Universal GPU platform will support two families of systems: those with third-generation AMD EPYC 7003 processors and either AMD Instinct MI250 GPUs or the Nvidia A100 Tensor Core 4-GPU, and those with third-generation Intel Xeon Scalable processors with built-in AI accelerators and the Nvidia A100 Tensor Core 4-GPU. These systems are designed with improved thermal capacity to accommodate GPUs of up to 700W.

The Supermicro Universal GPU platform is based on an open standards design and is intended to work with a wide range of GPUs. Because it adheres to an agreed-upon set of hardware design standards, such as Universal Baseboard (UBB) and OCP Accelerator Modules (OAM), as well as PCI-E and platform-specific interfaces, IT administrators can choose the GPU architecture best suited to their HPC or AI workloads. This will also simplify the installation, testing, production and upgrading of GPUs, said Super Micro Computer. IT administrators will likewise be able to choose the right combination of CPUs and GPUs to create an optimal system based on the needs of their users.

The 4U or 5U Universal GPU server will be available for accelerators that use the UBB standard, as well as PCI-E 4.0 and, soon, PCI-E 5.0. The server also offers 32 DIMM slots, along with a range of storage and networking options that can likewise be connected using the PCI-E standard.

The Supermicro Universal GPU server can accommodate GPUs on baseboards in the SXM or OAM form factors, which use high-speed GPU-to-GPU interconnects such as Nvidia NVLink or AMD xGMI Infinity Fabric, as well as GPUs that connect directly via a PCI-E slot. All major current CPU and GPU platforms will be supported, confirmed the company.

The server is designed for maximum airflow and accommodates current and future CPUs and GPUs in cases where the highest TDP (thermal design power) parts are required for maximum application performance. Direct-to-chip liquid cooling options are available for the Supermicro Universal GPU server for when CPUs and GPUs require increased cooling.

The modular design means specific subsystems of the server can be replaced or upgraded individually. This extends the service life of the overall system and reduces the e-waste that would be generated by replacing the complete server with every new CPU or GPU generation.