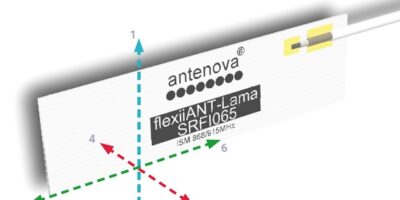

Able to operate in both Europe’s 868 MHz bands and the US 915 MHz bands, Lama is a small, dual-band antenna from Antenova.

The Lama (part number SRFI065) was developed for small, connected devices operating on LP-WAN networks, including LoRa, Sigfox, Wi-SUN and MIoTy. It covers the ISM (industrial, scientific and medical) frequencies used in both Europe and the US, which means that one product design can be sold in both markets.

The Lama antenna is suitable for small, networked devices operating across wide geographic areas in IoT applications, especially in smart agriculture, smart cities and tracking, advised Antenova.

The small antenna is suitable for designs where real estate is limited. The flexible printed circuit (FPC) antenna measures 35 x 10 x 0.1 mm and is supplied with a standard 100 mm RF cable with I-PEX MHF connector. Its thin, flexible form allows it to be mounted in several different ways in a design.

The antenna performed equally well in tests for the 868 and 915 MHz bands, reported Antenova, showing a peak efficiency of 60 per cent and a maximum VSWR (voltage standing wave ratio) of 1.5:1 for both frequency bands.
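For readers who want to put those figures into a link budget, the sketch below converts a VSWR into reflection coefficient, return loss and mismatch loss using the standard transmission-line relations, and expresses an efficiency as a dB figure. The function names are illustrative only and do not come from Antenova. The announcement also does not say whether the quoted efficiency already includes mismatch loss, so the two results should not be added together without checking the datasheet.

```python
import math


def vswr_to_reflection(vswr: float) -> float:
    """Magnitude of the reflection coefficient for a given VSWR."""
    if vswr < 1.0:
        raise ValueError("VSWR must be >= 1")
    return (vswr - 1.0) / (vswr + 1.0)


def return_loss_db(vswr: float) -> float:
    """Return loss in dB (positive number; larger means a better match)."""
    gamma = vswr_to_reflection(vswr)
    if gamma == 0.0:
        return math.inf  # perfect match reflects nothing
    return -20.0 * math.log10(gamma)


def mismatch_loss_db(vswr: float) -> float:
    """Power lost to reflection at the feed, in dB."""
    gamma = vswr_to_reflection(vswr)
    return -10.0 * math.log10(1.0 - gamma ** 2)


def efficiency_db(efficiency: float) -> float:
    """Efficiency given as a fraction (0..1], expressed in dB."""
    if not 0.0 < efficiency <= 1.0:
        raise ValueError("efficiency must be in (0, 1]")
    return 10.0 * math.log10(efficiency)


if __name__ == "__main__":
    vswr = 1.5        # maximum VSWR quoted in the article
    efficiency = 0.60  # peak efficiency quoted in the article
    print(f"|reflection coefficient| = {vswr_to_reflection(vswr):.2f}")
    print(f"return loss   = {return_loss_db(vswr):.2f} dB")
    print(f"mismatch loss = {mismatch_loss_db(vswr):.2f} dB")
    print(f"efficiency    = {efficiency_db(efficiency):.2f} dB")
```

A VSWR of 1.5:1 corresponds to a reflection coefficient of 0.2, meaning about 4 per cent of the incident power is reflected at the feed.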

The LP-WAN networks use the ISM frequencies: 863 to 870 MHz in Europe and 902 to 928 MHz in the US. These are licence-free bands, typically used to connect large fleets of low-powered devices that transmit small packets of data across large physical distances at low bit rates.
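As a small illustration of how a dual-region product might check its radio configuration against these ranges, the sketch below maps a carrier frequency to one of the two bands. The band labels and the `band_for` helper are hypothetical names chosen for this example, and the limits are simply the figures quoted above. Real deployments must also follow regional rules on sub-bands, duty cycle and transmit power, which this sketch does not model.

```python
from typing import Optional

# Band limits in MHz, taken from the ranges quoted in the article.
# The dictionary keys are illustrative labels, not official band names.
ISM_BANDS_MHZ = {
    "EU 868": (863.0, 870.0),
    "US 915": (902.0, 928.0),
}


def band_for(freq_mhz: float) -> Optional[str]:
    """Return the label of the band containing freq_mhz, or None if outside both."""
    for label, (low, high) in ISM_BANDS_MHZ.items():
        if low <= freq_mhz <= high:
            return label
    return None


if __name__ == "__main__":
    for f in (868.1, 915.0, 880.0):
        print(f"{f} MHz -> {band_for(f)}")
```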

Typical agricultural applications use cloud-connected sensors to measure ground moisture or temperature, or to track livestock such as cattle.

In smart cities, it is suitable for remote-controlled applications such as meter reading, environmental monitoring and connected services, including the control of street lighting, parking sensors or waste bins.

In other IoT sectors, the Lama antenna fits commercial applications in cold chain transport, distribution, logistics and tracking of goods and containers.

Antenova’s Michael Castle commented: “The Lama antenna targets . . . growing markets which will need large volumes of devices on all continents of the world. For example, we estimate there could be 45 million connected street lights worldwide by 2025.”

Antenova provides consultancy and testing services, along with a selection of online tools and calculators, to help designers achieve a successful integration and a high-performance wireless device.